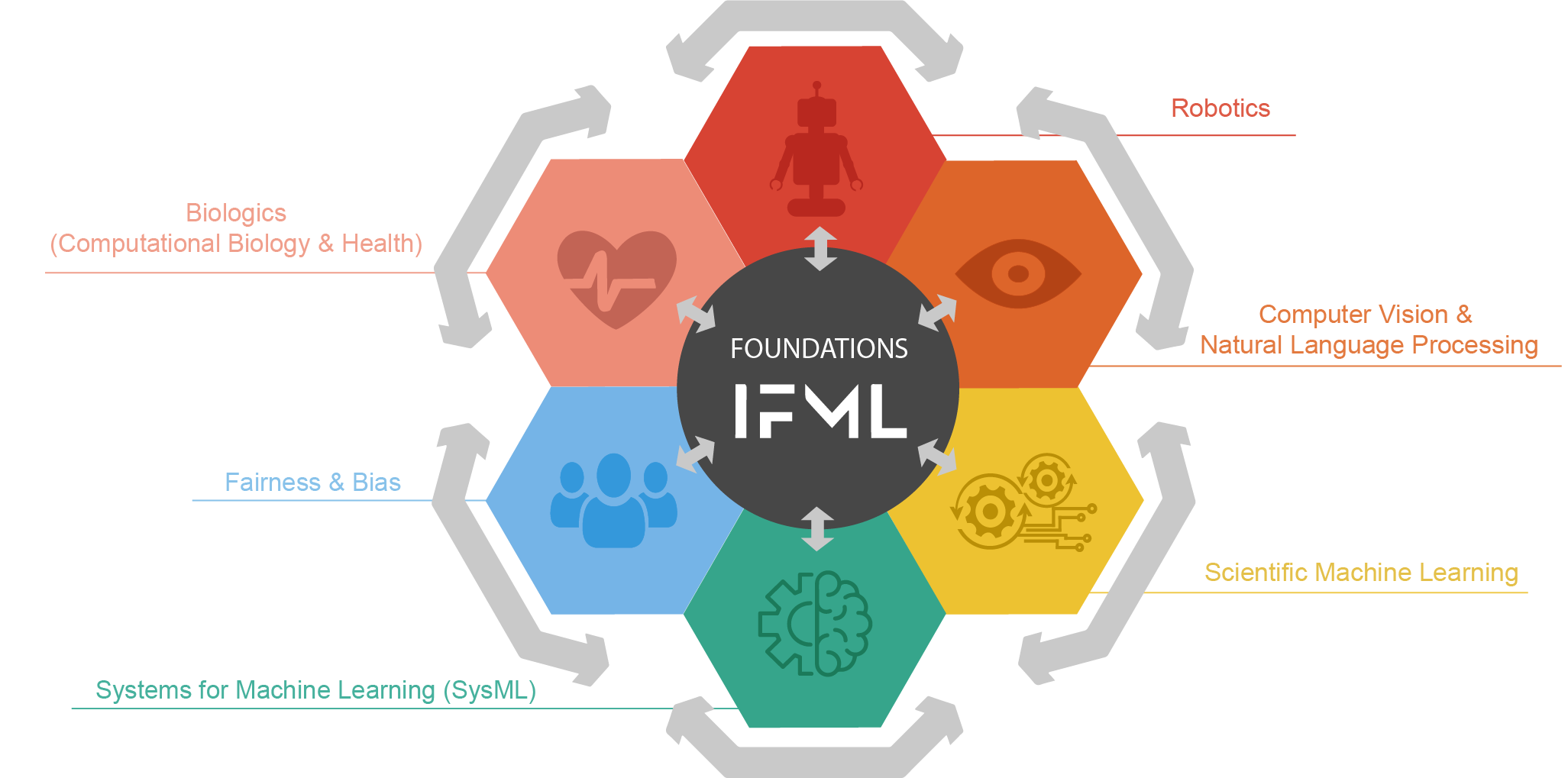

Research Agenda

The Machine Learning Laboratory will focus its initial research agenda on core algorithmic advances and a select set of applications that reflect the strengths and capabilities of UT Austin. The agenda will remain flexible so that it can respond to the evolving uses of machine learning and related technologies.

The Fundamentals

Algorithms & Deep Learning

Deep learning algorithms have achieved breakthrough performance on standard computer vision benchmarks and superhuman performance at the game of Go. Major research goals in this area will include 1) understanding mathematically why algorithms for training deep networks succeed and 2) developing more powerful algorithmic tools.

Optimization

Most widely used machine learning systems are based on simple optimization tools that are often decades old. We seek to understand the conditions under which these simple algorithms can globally minimize the highly nonconvex functions that arise when training models. We will also work on accelerating more sophisticated optimization methods so that they become practical on large data sets.

Interpretability & Fairness

As machine-learning-based models grow in complexity and in their influence on people's lives, algorithmic transparency will be crucial in allowing humans to detect and correct erroneous classifications and to recognize unfair biases embedded in these systems. Improving the interpretability of classifiers to address fairness and discrimination will be a central part of our research.

Reinforcement Learning

We are far from fully understanding how best to enable computers and robots to learn to act efficiently in real-world situations. We will focus on pressing questions such as how to reduce the computational and sample (data) complexity of reinforcement learning (RL) algorithms and how to analyze their expected and worst-case performance, with the goal of making these algorithms applicable to real-world domains.

Scalability (HPC)

We will focus on scaling current methods to massive data sets and on identifying viable next-generation architectures for doing so. This includes algorithmic research aimed at increasing parallelism and performance, leveraging supercomputing resources, and exploring novel hardware architectures that deliver cost-effective high performance for both the training and the application of next-generation ML techniques.